Last time we saw we could speed up memory allocations dramatically by using a pool allocator. I had a nagging feeling though. My pool allocator uses a std::vector, and it's doing all kinds of things behind the scenes. Resizing on the fly is cheating and undesired. Another thing that's certainly not right is that we're manually calling the destructor, while the object is alive and well, and present in the vector. This ultimately leads to the double destruction of objects once the pool is destructed; the destructor of the pool destructs the vector, which in turn destructs all objects it holds—effectively destructing objects twice. Double destruction is not a problem when your destructors are coded neatly, but it is something that normally is not supposed to happen. That's a big FIXME remark that needs to be addressed.

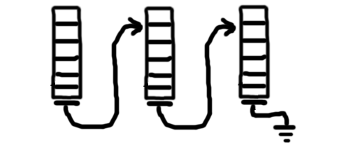

As a proposed fix for the problem, let's do away with std::vector altogether. Let's change the vector into a fixed size array. Upon initialization, the space for the array is preallocated, but no objects are created, no constructors are being run. When an object is allocated from the array, its constructor is invoked via placement new. When the (fixed size) array runs out space, allocate a new ‘subpool’ and chainlink them together as a linked list.

When an object is freed, you can look at the address and work out which array slot it came from. When all is well, manually call the destructor. If that pointer came from somewhere else, generate a fatal error.

When an object is freed, you can look at the address and work out which array slot it came from. When all is well, manually call the destructor. If that pointer came from somewhere else, generate a fatal error.

This is a slab allocator. There is an opportunity here to implement garbage collection, and it's not as difficult as it may seem. All the allocator needs to do is keep track of which slots are allocated, and which slots are free—and it already needs to do that anyway. Once we get that right, garbage collection is simply destroying any objects that are still lingering around when the pool is destructed.

In order to track which slots are free, you could keep a small bitmap. If there are eight slots per slab, the bitmap is as small as one single byte. If you choose a single 64-bit int for a bitmap, the slab can hold 64 slots. That's a big slab. Personally, I went for sixteen objects in a slab.

Although a bitmap is perfectly okay for tracking free slots, you'll need to run through lots of bit shifting loops for the bookkeeping. The performance can be greatly improved by employing a different technique.

Although a bitmap is perfectly okay for tracking free slots, you'll need to run through lots of bit shifting loops for the bookkeeping. The performance can be greatly improved by employing a different technique.

I know the Linux kernel internally uses a slab allocator, and therefore I took a peek in the book Understanding the Linux Kernel. It's in there alright, but unfortunately I couldn't make any sense of it. This stuff is hard. Or maybe not so much, I did find a rather good explanation on the web in the documentation on kernel.org.

What happens in the kernel is that each slab has a freelist, which is an array of integers (since we store only small numbers, these can be bytes rather than 64-bit ints). Each entry in the array represents an index to the next free slot. So, the index functions somewhat as a pointer to a list of free objects.

The final entry in the freelist array is -1, marking the end, when the slab runs out of memory. Additionally, there is an integer free_slot which denotes the first free slot. Since this is always the index of the first free slot, all the allocator does is access this number when an object is requested. It doesn't even have to search a free block, it already knows which object slot is free.

It's not described exactly in the kernel documentation, but at this point you should mark the freelist slot as being in use. Again, this is important for garbage collection.

[In the kernel source the member is actually called free, which in my code would inconveniently clash with the std::free() function, as well as my Slab<T>::free() method. Even though C++ has namespacing I still went with free_slot instead.]

The final entry in the freelist array is -1, marking the end, when the slab runs out of memory. Additionally, there is an integer free_slot which denotes the first free slot. Since this is always the index of the first free slot, all the allocator does is access this number when an object is requested. It doesn't even have to search a free block, it already knows which object slot is free.

It's not described exactly in the kernel documentation, but at this point you should mark the freelist slot as being in use. Again, this is important for garbage collection.

[In the kernel source the member is actually called free, which in my code would inconveniently clash with the std::free() function, as well as my Slab<T>::free() method. Even though C++ has namespacing I still went with free_slot instead.]

For illustration purposes, consider what happens when we have a slab allocator with four object slots. Upon initialisation:

free_slot: 0

free_list: { 1, 2, 3, END }

Now, let's allocate an object.

free_slot: 1

free_list: { IN_USE, 2, 3, END }

Let's allocate one more.

free_slot: 2

free_list: { IN_USE, IN_USE, 3, END }

When memory is freed, look at the address to find out to which slab it belongs, and which slot it is. The free_slot pointer points at the first next free object; insert this number into the freelist slot. This effectively marks the slot as free again. If the slot was not marked as in use, something would be terribly wrong and the program should abort immediately. The free_slot pointer now becomes the slot number itself, thus the first free object is the object we just freed. Note how the freelist works in a LIFO fashion.

When we free the first allocated object, the result is:

free_slot: 0

free_list: { 2, IN_USE, 3, END }

If we want to garbage collect at this point, we can easily see that the object in slot #1 is still hanging around. We can garbage collect by freeing it. The situation then becomes as follows:

free_slot: 1

free_list: { 2, 0, 3, END }

The freelist now appears shuffled, but that doesn't matter; it operates as a LIFO queue. If we now allocate an object again, it fetches the object in slot #1.

Managing the freelist like this is deceivingly simple. This slab allocator greatly outperforms our previous solution.

Combining everything said here, the data structure in code is:

Combining everything said here, the data structure in code is:

template <typename T>

struct Slab {

Slab<T>* next;

signed short free_slot;

signed char free_list[NUM_OBJECTS];

T objects[NUM_OBJECTS];

};

What I initially did here is use fixed size arrays. In the Linux kernel however, the only constant is the size of the memory page for the slab. It calculates how many objects of the desired object size can fit onto that page, taking into account the size of the metadata and necessary alignment. It crams as many objects as possible into a page, but note that the precise amount is different for every type T. For instance, many small objects of type A may fit into a single memory page, while another slab can hold a few large objects of type B.

The Linux kernel improves efficiency of the allocator even more by keeping three separate lists to manage slabs:

lists for empty, full, and partially full slabs. On top of that, it keeps caches of slabs, so it always has quick access to them in case of need.

I simply couldn't resist the temptation, so the updated version is:

I simply couldn't resist the temptation, so the updated version is:

template <typename T>

struct Slab {

Slab<T>* prev, *next;

unsigned short num_objects, num_free;

unsigned short free_slot;

// compiler may insert padding here

// free_list is directly behind the struct in memory

// objects are behind the free_list in memory

};

Slab<T>* empty, *full, *partial;

[Now, num_objects isn't really a property of the slab; it's a property of the slab type. So why is it implemented as such? The reason is it's not a straightforward constexpr due to alignment, and the freelist having a maximum limit (we're using bytes as slot index, that's only 8 bits). The slab is written as a self-contained object allocator, and therefore it knows how many objects it can contain. In the Linux kernel, each slab also knows its object size. Since we're using C++ templates here, we can simply get away with sizeof(T).]

And you know what they say, safety first. The metadata is kept inside the slab, as well as the objects that are handed out to the application side of things. A badly behaving application may well overwrite other objects, or worse, our freelist. To guard against this, my slab allocator implements red zoning and object markers; magic numbers are inserted in between, and they are checked every single time.

For game code, the extra checks are #ifdef-ed out in a release build, but for other kinds of applications it's probably best to leave them in anyway.

Moreover, be mindful that a memory allocator should always zero the memory: not only when handing out objects, but also when they are freed. If you don't, you will get a massive Heartbleed disaster—the baddest security bug of 2014, the decade, and maybe of all computing history.

For game code, the extra checks are #ifdef-ed out in a release build, but for other kinds of applications it's probably best to leave them in anyway.

Moreover, be mindful that a memory allocator should always zero the memory: not only when handing out objects, but also when they are freed. If you don't, you will get a massive Heartbleed disaster—the baddest security bug of 2014, the decade, and maybe of all computing history.

Happy holidays, and be careful with fireworks on New Year's Eve. You will need those fingers to do some more programming in 2015.