Not so long ago, I wrote a rather large Python program, which turned out to be a very useful tool. It was thousands of lines of Python, but I wrote it in only a couple of weeks. I wrote it "with my eyes closed", churning out code at a high rate, and yet there were few bugs, and they were always easy to resolve when problems did occur. This is the power of Python. The ease with which you can produce useful, working code is simply amazing. Writing such a program in C or C++ would take much longer: months, maybe as long as a year. Skill has little to do with it. The language is simply easy on the developer.

Python is a fine language, and its performance is quite all right, but it simply can't match the raw power of C or C++. Nor would I be comfortable creating something like a game in Python. While this is perfectly possible, my personal experience steers me toward libraries like SDL, OpenGL, OpenAL, and whatnot.

At the same time, a language like C++ is perfectly suited for creating games, except that the language itself causes a lot of stress. C++ is not an easy language. It is obsessively type-correct, to the point where a little word like 'const' or a misplaced & sign completely changes the meaning and makes your program do weird, unintended things. C++ pros may laugh at me, but keep in mind that I use C++ professionally, too.
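To make the complaint about the & sign concrete, here is a small illustrative example (the function names are made up for this post). The first two functions differ by a single `&`, yet one silently works on a copy while the other modifies the caller's string; adding `const` then turns the reference into a read-only view.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Takes the string by value: the function gets its own copy,
// so the caller's string is left untouched.
void appendBangByValue(std::string s) {
    s += "!";
}

// Takes the string by (non-const) reference: a single extra '&',
// and now the caller's string is modified in place.
void appendBangByRef(std::string& s) {
    s += "!";
}

// Adding 'const' makes the reference read-only: no copy is made,
// but any attempt to modify s in here would fail to compile.
std::size_t lengthOf(const std::string& s) {
    return s.size();
}

// Shows the difference side by side.
void demoValueVsReference() {
    std::string a = "hello";
    appendBangByValue(a);
    assert(a == "hello");     // unchanged: only the copy got the '!'

    std::string b = "hello";
    appendBangByRef(b);
    assert(b == "hello!");    // changed through the reference
    assert(lengthOf(b) == 6);
}
```

Both calls look identical at the call site, which is exactly why this kind of bug is easy to miss.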

In C++, I can spend all day pondering, almost philosophically, whether a certain class should be implemented one way or another. While a beautiful design is worth investing precious time in, are you getting any work done? And time is precious indeed. If I had to choose between easily writing a working program in Python and struggling with C++ just to make the compiler happy, I'd choose Python any day. If only I could bend C++ to play nice and see things my way... and you actually can. C++ is a very odd (but cool) language that lets you redefine operators to make it behave the way you want. Insanity: it even lets you redefine the "()" parentheses and the "->" dereference 'operator'! (gasp!)
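For readers who haven't seen it, this is roughly what overloading "()" and "->" looks like. `Counter` and `Handle` are hypothetical names for this illustration; a wrapper that forwards "->" like this is the same trick smart pointers rely on.

```cpp
#include <cassert>

// A plain class we want to reach through a wrapper.
struct Counter {
    int value = 0;
    void increment() { ++value; }
};

// Hypothetical wrapper that owns a Counter and overloads two operators.
class Handle {
public:
    // Overloaded '->': h->increment() is forwarded to the wrapped Counter.
    Counter* operator->() { return &counter_; }

    // Overloaded '()': the wrapper can be called like a function.
    // Here h(n) adds n to the counter and returns the new value.
    int operator()(int n) {
        counter_.value += n;
        return counter_.value;
    }

private:
    Counter counter_;
};

// Usage: both operators in action.
inline void demoHandle() {
    Handle h;
    h->increment();           // goes through operator->
    assert(h->value == 1);
    assert(h(5) == 6);        // goes through operator()
}
```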

For the past few weeks, I've been working on a library that implements some basic objects like a Number, a String, an Array, a List, and a Dictionary. Similar types exist in the STL, but they are not quite the way I would like them to be: like Python's. Anyway, what an adventure! The undertaking was heavier than anticipated. Each class derives from a base class named Object, which is the base of everything.

C++ has this cool feature that when a local instance goes out of scope, its destructor is called. So you get a kind of automatic memory management. But wait, it's much more complicated than that. What if the List merely refers to our String, and the String goes out of scope? Boom, the program breaks. By contrast, when you use new to allocate an Object, it remains in existence and its destructor is not called until you delete it. Of course, you say, this is basic C++. Now, we use this feature to allocate a backend for our String 'proxy' object. The proxy may go out of scope, but the backend remains. The backend is not deallocated until its reference count drops to zero. And yes, adding the String to the List increments the reference count in the backend of the added Object.
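The proxy/backend scheme could look roughly like the following. This is a minimal sketch under my own assumptions, not the library's actual code: `StringBackend` and `List` are hypothetical names, only String is shown, and there is no thread safety. The proxy can be a local that goes out of scope; the heap-allocated backend survives as long as its reference count is above zero.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Heap-allocated backend that carries the actual data and a reference count.
class StringBackend {
public:
    explicit StringBackend(const std::string& s) : data(s), refs(1) {}
    void retain() { ++refs; }
    // Drops one reference; deletes itself when the last one is gone.
    void release() {
        assert(refs > 0);
        if (--refs == 0) delete this;
    }
    std::string data;
    std::size_t refs;
};

// Lightweight proxy: copying it only bumps the backend's reference count.
class String {
public:
    explicit String(const std::string& s) : backend_(new StringBackend(s)) {}
    String(const String& other) : backend_(other.backend_) { backend_->retain(); }
    String& operator=(const String& other) {
        other.backend_->retain();   // retain first, so self-assignment is safe
        backend_->release();
        backend_ = other.backend_;
        return *this;
    }
    ~String() { backend_->release(); }

    const std::string& str() const { return backend_->data; }
    std::size_t refCount() const { return backend_->refs; }

private:
    StringBackend* backend_;
};

// The List stores proxies by value, which increments each backend's count.
class List {
public:
    void append(const String& s) { items_.push_back(s); }
    const String& at(std::size_t i) const { return items_.at(i); }
    std::size_t size() const { return items_.size(); }

private:
    std::vector<String> items_;
};
```

When the local `String` leaves its scope, its destructor only drops one reference; the copy inside the List keeps the backend alive.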

Using this library, we can now write code that looks a lot like Python code but is really compiled by a C++ compiler. The performance is less than optimal, but surely better than Python's. Is everything ideal now? Umm, no. I ran into a problem where the compiler lost track of what type of object it was really dealing with, and I had to resort to static_cast<>()ing the thing to the correct type. C++'s type-correctness is really annoying. Another problem is that adding a new class is hell. You need to implement both a proxy and a backend class, and you need to implement lots of methods (low-level stuff like operator=(const Object&)) to make it work. Of course, your new class will have new methods, and you will have to use dynamic_cast<>() on the pointer to the derived backend a lot, which is coding hell.
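The dynamic_cast pain can be illustrated with a stripped-down sketch (hypothetical names, not the library's code): when every proxy holds a pointer to a generic `ObjectBackend`, reaching a method that only the derived backend has requires a run-time cast, and every new class with new methods adds more of these.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <stdexcept>
#include <string>
#include <utility>

// Common backend interface: only what every Object supports.
struct ObjectBackend {
    virtual ~ObjectBackend() = default;
    virtual std::string repr() const = 0;
};

struct NumberBackend : ObjectBackend {
    explicit NumberBackend(double v) : value(v) {}
    std::string repr() const override { return std::to_string(value); }
    double value;
};

// A String backend with a method the base class knows nothing about.
struct StringBackend : ObjectBackend {
    explicit StringBackend(std::string s) : text(std::move(s)) {}
    std::string repr() const override { return text; }
    std::size_t length() const { return text.size(); }
    std::string text;
};

// The proxy only holds a pointer to the generic backend ...
class Object {
public:
    explicit Object(std::shared_ptr<ObjectBackend> b) : backend_(std::move(b)) {
        assert(backend_ != nullptr);
    }
    std::string repr() const { return backend_->repr(); }  // fine: virtual
    const ObjectBackend* backend() const { return backend_.get(); }

private:
    std::shared_ptr<ObjectBackend> backend_;
};

// ... so any String-specific call must recover the derived type at run time.
// dynamic_cast yields nullptr when the backend is of some other type.
std::size_t stringLength(const Object& obj) {
    const auto* sb = dynamic_cast<const StringBackend*>(obj.backend());
    if (sb == nullptr) throw std::invalid_argument("Object is not a String");
    return sb->length();
}
```

Virtual methods declared on the base (like `repr`) need no cast; it is the class-specific extras that force one.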

Although I succeeded (more or less) in implementing a Python-esque library to ease writing C++ code, I'm now considering dropping the idea of mimicking Python and moving away from a copy-by-value paradigm to a more efficient pointer-oriented solution, much like Objective-C has. In Objective-C, every object is accessed through a pointer to an instance; you just hardly notice it due to the syntax. Objective-C code is not as easy to write as Python, but I really dig its nice API and its convention of long, descriptive method names.

In Objective-C, objects use 'manual' reference counting via two calls: retain and release. Retain/release is not super-easy, but it helps a lot compared to old-fashioned C memory management. It can be combined with an autorelease pool, which releases objects in bulk when the pool is drained. Objective-C has another extremely useful feature: sending a message to nil (for example, calling a method through a variable that was never assigned an object) simply does nothing. No program crash; the call is silently ignored. I have yet to think about how one could mimic this behavior efficiently. This time, my weapon of choice would be standard C. Ah, the joys of good old plain C.
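A minimal sketch of retain/release with an autorelease pool, written in C++ for consistency with the other examples here but in a mostly C style (malloc/free, free functions), so it maps closely to plain C. `RcObject`, `rc_new`, `rc_retain`, `rc_release`, `rc_autorelease`, and `rc_drain_pool` are hypothetical names, not the Objective-C runtime's API. Passing a null pointer to these functions does nothing, which mimics messaging nil. Real autorelease pools are per-thread and nestable; this sketch assumes a single global pool.

```cpp
#include <cassert>
#include <cstdlib>
#include <vector>

// A reference-counted object; 'payload' stands in for real data.
struct RcObject {
    int refs;
    int payload;
};

// Counts live objects so the behaviour can be checked.
static int g_live_objects = 0;

// Objects waiting to be released when the pool is drained.
static std::vector<RcObject*> g_autorelease_pool;

RcObject* rc_new(int payload) {
    RcObject* obj = static_cast<RcObject*>(std::malloc(sizeof(RcObject)));
    if (obj == nullptr) return nullptr;
    obj->refs = 1;            // the creator owns one reference
    obj->payload = payload;
    ++g_live_objects;
    return obj;
}

RcObject* rc_retain(RcObject* obj) {
    if (obj == nullptr) return nullptr;   // like messaging nil: no-op
    ++obj->refs;
    return obj;
}

void rc_release(RcObject* obj) {
    if (obj == nullptr) return;           // like messaging nil: no-op
    assert(obj->refs > 0);
    if (--obj->refs == 0) {
        std::free(obj);
        --g_live_objects;
    }
}

// Hand one reference to the pool instead of releasing it right now.
RcObject* rc_autorelease(RcObject* obj) {
    if (obj != nullptr) g_autorelease_pool.push_back(obj);
    return obj;
}

// Release everything that was autoreleased since the last drain.
void rc_drain_pool() {
    for (RcObject* obj : g_autorelease_pool) rc_release(obj);
    g_autorelease_pool.clear();
}

int rc_live_objects() { return g_live_objects; }
```

A function can return `rc_autorelease(rc_new(42))` and let the caller decide whether to `rc_retain` the result; anything not retained disappears at the next `rc_drain_pool()`.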

Now that we have entered the multi-core age, we should all be writing multi-threaded code. Even though the topic is nothing new, I'd still like to devote another blog post to it. I came up with an extremely generic model that applies in most cases. It is based on the producer-consumer model, but with a twist: the standard producer-consumer model is often a bit too simplistic in practice.
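For reference, the plain producer-consumer model can be sketched with a mutex-protected queue and a condition variable. The twist isn't described in this excerpt, so this shows only the standard baseline; `WorkQueue` and `sumWithConsumers` are hypothetical names.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <optional>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Thread-safe queue: producers push work, consumers pop it.
// close() tells consumers that no more work will arrive.
template <typename T>
class WorkQueue {
public:
    void push(T item) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            assert(!closed_ && "push after close");
            items_.push(std::move(item));
        }
        ready_.notify_one();
    }

    void close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        ready_.notify_all();
    }

    // Blocks until an item is available; returns std::nullopt once the
    // queue is closed and empty, which is the consumer's signal to stop.
    std::optional<T> pop() {
        std::unique_lock<std::mutex> lock(mutex_);
        ready_.wait(lock, [this] { return closed_ || !items_.empty(); });
        if (items_.empty()) return std::nullopt;
        T item = std::move(items_.front());
        items_.pop();
        return item;
    }

private:
    std::mutex mutex_;
    std::condition_variable ready_;
    std::queue<T> items_;
    bool closed_ = false;
};

// Example: one producer, several consumers summing the numbers 1..n.
long long sumWithConsumers(int n, int consumers) {
    WorkQueue<int> queue;
    std::mutex sumMutex;
    long long total = 0;

    std::vector<std::thread> workers;
    for (int c = 0; c < consumers; ++c) {
        workers.emplace_back([&] {
            long long local = 0;
            while (auto item = queue.pop()) local += *item;
            std::lock_guard<std::mutex> lock(sumMutex);
            total += local;
        });
    }

    for (int i = 1; i <= n; ++i) queue.push(i);   // the producer
    queue.close();

    for (auto& w : workers) w.join();
    return total;
}
```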


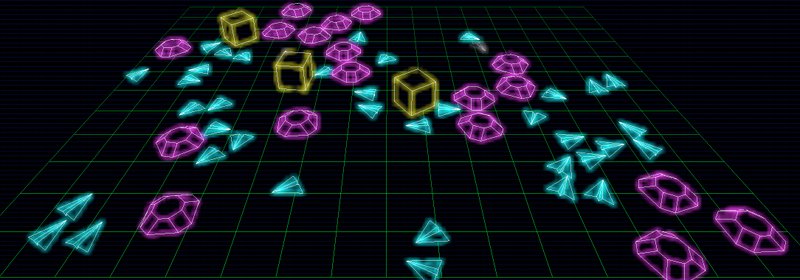

In 3D games, models consisting of thousands of vertices and polygons are rendered to the screen at 30 frames per second or more. Without some form of caching (for example, with immediate mode or client-side vertex arrays), these vertices are transmitted from main memory to the GPU over the bus for every frame. For static models, this is a huge waste of time and bus bandwidth, because the vertices are the same in every frame. Why not cache the data in graphics memory and tell the GPU to fetch it from there? This is what vertex buffer objects (VBOs) are all about.
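To put a rough number on the waste, take a hypothetical static model of 10,000 vertices, each with a position, a normal, and a texture coordinate stored as 32-bit floats (3 + 3 + 2 = 8 floats, i.e. 32 bytes per vertex). Resending it every frame at 30 frames per second costs:

$$
\begin{aligned}
10\,000 \times 32\ \text{B} &= 320\,000\ \text{B per frame} \\
320\,000\ \text{B} \times 30\ \text{frames/s} &= 9\,600\,000\ \text{B/s} \approx 9.6\ \text{MB/s}
\end{aligned}
$$

That is for a single model and ignores index data; a scene full of such models multiplies it. With a VBO, the data is uploaded once (in OpenGL, via `glBufferData` with a usage hint such as `GL_STATIC_DRAW`, which lets the driver keep it in graphics memory), and later draw calls source their vertices from that buffer.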


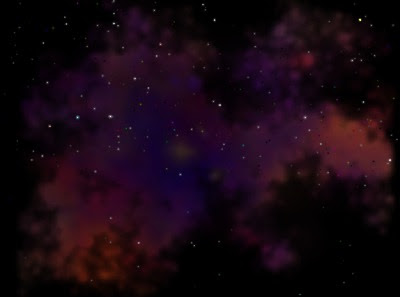

Last time, I talked about how to render a random nebula. As a final remark, I noted that the nebula had no shape: the plasma simply fills the entire image up to its boundaries. The result is an unusable texture; you cannot have a nebula in the starry sky that is shaped like a perfect square. So we need to give it a random shape.
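The post doesn't show how the shape is made, so here is one possible approach as a sketch under my own assumptions: build a mask from a few randomly placed soft (Gaussian) blobs, force it to zero near the texture border, and multiply the plasma by it. `makeNebulaMask`, `applyMask`, and their parameters are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Returns a size x size mask in [0, 1], stored row-major.
// A few random Gaussian blobs give an irregular outline, and a border
// falloff guarantees the mask reaches 0 at the texture edges.
std::vector<float> makeNebulaMask(int size, int blobs, unsigned seed) {
    assert(size > 1 && blobs > 0);
    std::mt19937 rng(seed);
    // Keep blob centres away from the border; pick random radii.
    std::uniform_real_distribution<float> centre(0.3f, 0.7f);
    std::uniform_real_distribution<float> radius(0.1f, 0.25f);

    struct Blob { float x, y, r; };
    std::vector<Blob> list;
    for (int i = 0; i < blobs; ++i)
        list.push_back({centre(rng), centre(rng), radius(rng)});

    std::vector<float> mask(static_cast<std::size_t>(size) * size);
    for (int y = 0; y < size; ++y) {
        for (int x = 0; x < size; ++x) {
            // Normalised coordinates in [0, 1].
            float u = static_cast<float>(x) / (size - 1);
            float v = static_cast<float>(y) / (size - 1);

            // Sum of Gaussian blobs, clamped to 1.
            float m = 0.0f;
            for (const Blob& b : list) {
                float dx = u - b.x, dy = v - b.y;
                m += std::exp(-(dx * dx + dy * dy) / (b.r * b.r));
            }
            m = std::min(m, 1.0f);

            // Distance to the nearest edge: 0 on the border, 0.5 at the centre.
            float edge = std::min(std::min(u, 1.0f - u), std::min(v, 1.0f - v));
            // Smoothstep from 0 at the border to 1 at 10% of the width inside.
            float t = std::min(edge / 0.1f, 1.0f);
            float fade = t * t * (3.0f - 2.0f * t);

            mask[static_cast<std::size_t>(y) * size + x] = m * fade;
        }
    }
    return mask;
}

// Multiplies a plasma image (same size and layout) by the mask, in place.
void applyMask(std::vector<float>& plasma, const std::vector<float>& mask) {
    assert(plasma.size() == mask.size());
    for (std::size_t i = 0; i < plasma.size(); ++i) plasma[i] *= mask[i];
}
```

Multiplying keeps the plasma's internal structure while giving it an irregular outline that fades out before the edges. The 10% border, blob count, and radius ranges are arbitrary choices to tune by eye.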


As I wrote in my previous post, I've been playing with a random 3D star field lately. To this star field, I'd like to add some nebulae to fill the black void of space. Previously, I worked with dozens of photos of real nebulae from NASA. This time, I decided I want the scene to be entirely fictional, so there is no place for real nebulae.


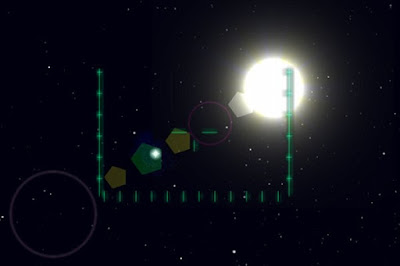

Although it's just a boring gas cloud, I'm quite happy with it. Not everything is well, though: the plasma renders into a square, so there is a square nebula in the sky. To make it perfect, the nebula must be given a random shape and fade away near the edges of the texture square.
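The post doesn't say which plasma algorithm produced the cloud. A common way to generate this kind of plasma is the diamond-square (midpoint displacement) algorithm, so here is a minimal sketch of it, assuming a square grid whose side is 2^n + 1 and output normalised to [0, 1]. `makePlasma` and its `roughness` and `seed` parameters are hypothetical names for this illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Diamond-square "plasma" on a size x size grid (size must be 2^n + 1).
// roughness < 1 makes the random offsets shrink at each finer level.
std::vector<float> makePlasma(int size, float roughness, unsigned seed) {
    assert(size >= 3 && ((size - 1) & (size - 2)) == 0);  // size - 1 is a power of two
    std::mt19937 rng(seed);
    std::uniform_real_distribution<float> jitter(-1.0f, 1.0f);
    std::vector<float> g(static_cast<std::size_t>(size) * size, 0.0f);
    auto at = [&](int x, int y) -> float& {
        return g[static_cast<std::size_t>(y) * size + x];
    };

    // Seed the four corners with random values.
    at(0, 0) = jitter(rng);
    at(size - 1, 0) = jitter(rng);
    at(0, size - 1) = jitter(rng);
    at(size - 1, size - 1) = jitter(rng);

    float scale = 1.0f;
    for (int step = size - 1; step > 1; step /= 2) {
        int half = step / 2;

        // Diamond step: centre of each square = average of its 4 corners + noise.
        for (int y = half; y < size; y += step)
            for (int x = half; x < size; x += step)
                at(x, y) = (at(x - half, y - half) + at(x + half, y - half) +
                            at(x - half, y + half) + at(x + half, y + half)) / 4.0f +
                           jitter(rng) * scale;

        // Square step: edge midpoints = average of the neighbours that exist + noise.
        for (int y = 0; y < size; y += half) {
            for (int x = (y / half % 2 == 0) ? half : 0; x < size; x += step) {
                float sum = 0.0f;
                int count = 0;
                if (x - half >= 0)   { sum += at(x - half, y); ++count; }
                if (x + half < size) { sum += at(x + half, y); ++count; }
                if (y - half >= 0)   { sum += at(x, y - half); ++count; }
                if (y + half < size) { sum += at(x, y + half); ++count; }
                at(x, y) = sum / count + jitter(rng) * scale;
            }
        }
        scale *= roughness;
    }

    // Normalise to [0, 1] so the values can be used directly as intensity.
    auto [lo, hi] = std::minmax_element(g.begin(), g.end());
    float minV = *lo;
    float range = *hi - *lo;
    for (float& v : g) v = range > 0.0f ? (v - minV) / range : 0.0f;
    return g;
}
```

Each level halves the step and scales the random offsets by `roughness`, so the large billows come from the first few levels and the fine wisps from the last ones. Note that the result fills the whole square, which is exactly the problem described above.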

I've been playing with a 3D star field lately, and what it needs, of course, is a little space ship so that you can fly around. Sounds easy enough, but it's actually quite confusing.